Apache Hadoop vs Apache Spark

- Mister siswa

- 2022-11-21T13:41

- Hadoop

An overview of the respective architectures of Hadoop and Spark, how these big data frameworks compare in various scenarios, and which use cases each solution is best suited to.

Apache Hadoop and Apache Spark are two popular big data frameworks maintained by the Apache Software Foundation. Each comes with a robust ecosystem of open-source tools for processing, managing, and analyzing very large volumes of data.

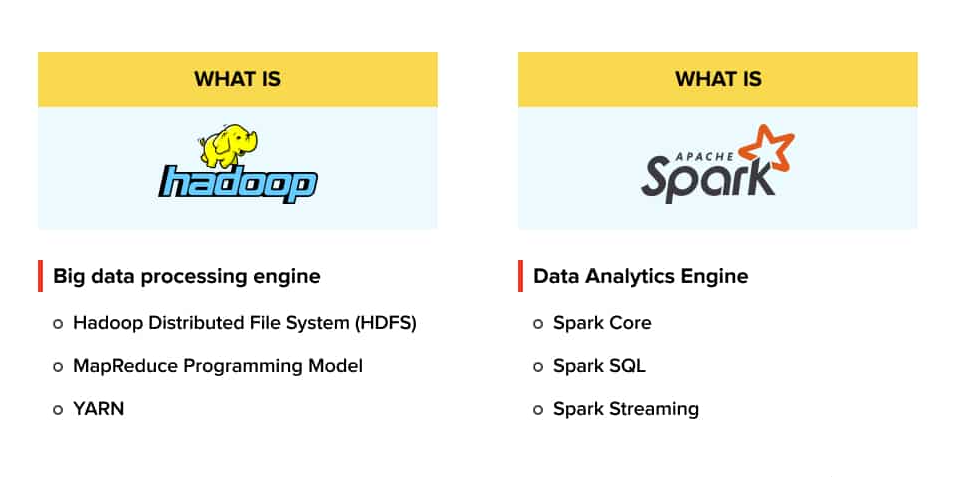

What is Apache Hadoop?

Apache Hadoop is an open-source software framework that lets users manage large data sets (from gigabytes to petabytes) by distributing them across a network of computers, or "nodes". It is a highly scalable, cost-effective solution for storing and processing structured, semi-structured, and unstructured data, such as web server logs, IoT sensor data, and Internet clickstream records.

Here are some advantages of the Hadoop framework:

- Data protection in the event of hardware failure

- Massive scalability, from a single server to thousands of machines

- Analytics on historical data to support decision-making processes
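HDFS is Hadoop's storage layer, the Hadoop Distributed File System. It provides the fault tolerance and scalability listed above by splitting each file into large blocks and keeping several replicas of every block on different machines (DataNodes). The sketch below is a simplified model, not HDFS code:

- `plan_blocks` and `survives_failure` are hypothetical helpers.
- The round-robin placement is an assumption. It stands in for HDFS's actual rack-aware placement policy.
- Only the defaults come from Hadoop's standard configuration: 128 MB blocks and a replication factor of 3.

```python
MB = 1024 * 1024
DEFAULT_BLOCK_SIZE = 128 * MB  # default value of dfs.blocksize in Hadoop 2.x/3.x
DEFAULT_REPLICATION = 3  # default value of dfs.replication


def plan_blocks(
    file_size: int,
    datanodes: list[str],
    block_size: int = DEFAULT_BLOCK_SIZE,
    replication: int = DEFAULT_REPLICATION,
) -> list[tuple[int, list[str]]]:
    """Split a file into fixed-size blocks and pick `replication` distinct nodes per block.

    Hypothetical helper: real HDFS uses a rack-aware placement policy,
    whereas this sketch simply rotates through the node list.
    """
    if not 0 < replication <= len(datanodes):
        raise ValueError("replication must be between 1 and the number of DataNodes")
    plan = []
    for block_id, offset in enumerate(range(0, file_size, block_size)):
        size = min(block_size, file_size - offset)  # the last block may be smaller
        first = block_id % len(datanodes)
        replicas = [datanodes[(first + r) % len(datanodes)] for r in range(replication)]
        plan.append((size, replicas))
    return plan


def survives_failure(plan: list[tuple[int, list[str]]], failed: set[str]) -> bool:
    """Return True if every block still has at least one replica on a healthy node."""
    return all(any(node not in failed for node in replicas) for _, replicas in plan)


if __name__ == "__main__":
    nodes = ["dn1", "dn2", "dn3", "dn4"]
    plan = plan_blocks(300 * MB, nodes)
    for size, replicas in plan:
        print(size // MB, "MB ->", replicas)
    # With three distinct replicas per block, losing any two DataNodes leaves a copy.
    print(survives_failure(plan, {"dn1", "dn2"}))
```

Adding names to the DataNode list spreads blocks over more machines. That is the scale-out model Hadoop relies on as data volume grows.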

What is Apache Spark?

Apache Spark, which is also open source, is a data processing engine for large data sets. Like Hadoop, Spark splits large jobs across several nodes. However, it tends to run faster than Hadoop because it caches and processes data in random access memory (RAM) rather than in a file system. This lets Spark handle use cases that Hadoop cannot.

Among the advantages of the Spark framework are the following:

- A unified engine that supports SQL queries, streaming data, machine learning (ML), and graph processing

- Up to 100 times faster than Hadoop for smaller workloads, thanks to in-memory processing, disk data storage, and other optimizations

- APIs that make it easy to transform data and manipulate semi-structured data

The Hadoop ecosystem

Hadoop supports advanced analytics on stored data, such as predictive analysis, data mining, and machine learning (ML). It allows large data analytics jobs to be split into smaller tasks. These tasks are distributed across a Hadoop cluster (i.e., nodes that perform parallel computations on big data sets) and run in parallel using an algorithm such as MapReduce.

There are four main components that make up the Hadoop ecosystem:

- Hadoop Distributed File System (HDFS): The primary data storage system, which manages large data sets on commodity hardware. It also provides high-throughput data access and high fault tolerance.

- Yet Another Resource Negotiator (YARN): The cluster resource manager, which schedules tasks and allocates resources (such as CPU and memory) to applications.

- Hadoop MapReduce: Splits big data processing jobs into smaller tasks, distributes those tasks across many nodes, and then runs each one.

- Hadoop Common (Hadoop Core): The set of shared utilities and libraries that the other three modules depend on.
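The MapReduce flow has three steps: split a job into small tasks, run them in parallel, and combine the results. The classic word-count example illustrates it. What follows is a minimal single-machine sketch in plain Python, not Hadoop's Java API:

- `map_phase`, `shuffle`, `reduce_phase` and `word_count` are hypothetical names.
- A thread pool stands in for the cluster nodes.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable


def map_phase(split: str) -> list[tuple[str, int]]:
    """Map task: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped: Iterable[list[tuple[str, int]]]) -> dict[str, list[int]]:
    """Shuffle and sort: group every emitted value by key across all map tasks."""
    groups: dict[str, list[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce task: combine all values for a single key."""
    return key, sum(values)


def word_count(splits: list[str], workers: int = 4) -> dict[str, int]:
    # Each input split becomes an independent map task; threads stand in for nodes.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, splits))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    splits = ["Hadoop stores data", "Spark processes data", "data data"]
    print(word_count(splits))
    # {'data': 4, 'hadoop': 1, 'processes': 1, 'spark': 1, 'stores': 1}
```

In Hadoop itself, the map and reduce tasks run on different machines. YARN decides where each task runs.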

The Spark ecosystem

Apache Spark, one of the largest open-source projects in data processing, combines data processing and artificial intelligence (AI) in a single framework. Users can perform large-scale data transformations and analyses and then run state-of-the-art machine learning (ML) and AI algorithms on the results.

There are five main modules that make up the Spark ecosystem:

- Spark Core: The underlying execution engine, which schedules and dispatches tasks and coordinates input and output (I/O) operations.

- Spark SQL: Gathers information about the data, such as its schema, so that users can optimize the processing of structured data.

- Spark Streaming and Structured Streaming: Both add stream processing capabilities. Spark Streaming takes data from several streaming sources and divides it into micro-batches to form a continuous stream. Structured Streaming, built on Spark SQL, reduces latency and simplifies programming.

- Machine Learning Library (MLlib): A collection of scalable machine learning algorithms plus tools for feature selection and ML pipeline construction. DataFrames, the main API for MLlib, provide consistency across programming languages such as Java, Scala, and Python.

- GraphX: A user-friendly computation engine for interactively building, modifying, and analyzing scalable, graph-structured data.
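The micro-batch model behind Spark Streaming can be illustrated in plain Python. Each fixed-length interval of a stream is collected into a small batch, which is then processed with ordinary batch logic. This is a conceptual sketch, not Spark's API:

- `micro_batches` and `run_stream` are hypothetical names.
- The code assumes events arrive in timestamp order.

```python
from typing import Callable, Iterable, Iterator


def micro_batches(
    events: Iterable[tuple[float, str]], interval: float
) -> Iterator[tuple[int, list[str]]]:
    """Cut a time-ordered stream of (timestamp, record) pairs into fixed-length batches.

    Yields (batch_index, records), where batch_index is floor(timestamp / interval).
    Intervals with no events are skipped in this simplified version.
    """
    current = None
    batch: list[str] = []
    for ts, record in events:
        index = int(ts // interval)
        if current is not None and index != current:
            yield current, batch  # the interval is over: hand the batch to the engine
            batch = []
        current = index
        batch.append(record)
    if batch:
        yield current, batch


def run_stream(
    events: Iterable[tuple[float, str]],
    interval: float,
    process: Callable[[list[str]], int],
) -> list[int]:
    """Apply an ordinary batch function to every micro-batch, in arrival order."""
    return [process(batch) for _, batch in micro_batches(events, interval)]


if __name__ == "__main__":
    clicks = [(0.1, "home"), (0.4, "cart"), (1.2, "home"), (2.5, "pay"), (2.9, "home")]
    print(list(micro_batches(clicks, 1.0)))
    print(run_stream(clicks, 1.0, len))  # number of records in each one-second batch
```

Because each batch is an ordinary list, the same code that handles batch jobs can handle the stream. That is the main appeal of the micro-batch design.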

Comparing Hadoop and Spark

Spark is an enhancement to Hadoop MapReduce. The main difference between them is how they treat data between steps. Spark processes data in memory and keeps it there for subsequent steps, whereas MapReduce reads and writes data on disk between steps. As a result, Spark can process data up to 100 times faster than MapReduce for smaller workloads.
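The difference between the two execution styles can be made concrete by running a small pipeline two ways:

- once persisting every intermediate result to disk between stages, as chained MapReduce jobs do;
- once passing intermediate results along in memory, as Spark does.

Both functions are hypothetical illustrations, and JSON files in a temporary directory stand in for HDFS. The sketch shows where the extra I/O happens; it does not measure speed.

```python
import json
import os
import tempfile
from typing import Callable

Stage = Callable[[list[int]], list[int]]


def run_disk_pipeline(data: list[int], stages: list[Stage], workdir: str) -> list[int]:
    """MapReduce style: each stage reads its input from disk and writes its output back."""
    path = os.path.join(workdir, "stage_0.json")
    with open(path, "w") as f:
        json.dump(data, f)
    for i, stage in enumerate(stages, start=1):
        with open(path) as f:
            current = json.load(f)  # read the previous stage's output from disk
        path = os.path.join(workdir, f"stage_{i}.json")
        with open(path, "w") as f:
            json.dump(stage(current), f)  # persist this stage's output to disk
    with open(path) as f:
        return json.load(f)


def run_memory_pipeline(data: list[int], stages: list[Stage]) -> list[int]:
    """Spark style: intermediate results stay in memory between stages."""
    current = data
    for stage in stages:
        current = stage(current)
    return current


if __name__ == "__main__":
    stages: list[Stage] = [
        lambda xs: [x * 2 for x in xs],
        lambda xs: [x for x in xs if x % 3 == 0],
        lambda xs: [x + 1 for x in xs],
    ]
    data = list(range(10))
    with tempfile.TemporaryDirectory() as tmp:
        on_disk = run_disk_pipeline(data, stages, tmp)
    in_memory = run_memory_pipeline(data, stages)
    print(on_disk == in_memory, in_memory)  # True [1, 7, 13, 19]
```

Both versions produce the same answer. However, the disk version serializes, writes, and re-reads the data once per stage, and that cost grows with the number of stages.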

In addition, Spark does not use MapReduce's two-stage (map and reduce) execution model. Instead, it builds a Directed Acyclic Graph (DAG) to schedule tasks and coordinate nodes across the Hadoop cluster. This task tracking also provides fault tolerance. Spark records the operations applied to the data, so lost results can be rebuilt by reapplying those operations to data from an earlier state.
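Lineage-based recovery can be sketched with a small class that records transformations instead of applying them immediately. When a cached result is lost, the class replays the recorded transformations from the source. The sketch has these limits:

- `Partition` and its methods are hypothetical.
- It models only a linear chain of transformations on one partition.
- Spark's real DAG can have several parents per node and is split into stages at shuffle boundaries.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Transform = Callable[[list[int]], list[int]]


@dataclass
class Partition:
    """One partition of a data set plus the lineage needed to rebuild it."""

    source: list[int]  # stands in for data that can be re-read from stable storage such as HDFS
    lineage: list[Transform] = field(default_factory=list)
    cached: Optional[list[int]] = None

    def transform(self, fn: Transform) -> "Partition":
        # Record the operation lazily instead of running it (a new node in the graph).
        return Partition(self.source, self.lineage + [fn])

    def compute(self) -> list[int]:
        # Replay the recorded operations from the source unless a cached copy exists.
        if self.cached is None:
            data = self.source
            for fn in self.lineage:
                data = fn(data)
            self.cached = data
        return self.cached

    def lose_cache(self) -> None:
        # Simulate losing the in-memory result, e.g. because a worker failed.
        self.cached = None


if __name__ == "__main__":
    base = Partition(source=[1, 2, 3, 4])
    result = base.transform(lambda xs: [x * 10 for x in xs]).transform(
        lambda xs: [x for x in xs if x > 15]
    )
    print(result.compute())  # [20, 30, 40]
    result.lose_cache()
    print(result.compute())  # rebuilt from the source via the recorded lineage
```

Only the source data and the list of operations must survive a failure. The intermediate results themselves never have to be written to stable storage.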

Let's examine the major differences between Hadoop and Spark in six key areas:

- Performance: Spark is faster because it keeps intermediate data in random access memory (RAM) instead of reading and writing it to disk. Hadoop stores data from multiple sources and processes it in batches with MapReduce.

- Cost: Hadoop is cheaper to run because it can use any type of disk storage for data processing. Spark is more expensive because it relies on in-memory computation for real-time data processing, which requires large amounts of RAM to spin up nodes.

- Processing: Both technologies process data in a distributed environment. Hadoop is best suited to batch processing and linear data processing. Spark excels at processing unstructured data streams in real time.

- Scalability: When data volume grows quickly, Hadoop scales to meet demand through the Hadoop Distributed File System (HDFS). Spark, in turn, relies on the fault-tolerant HDFS to store very large amounts of data.

- Security: Spark improves security with shared-secret authentication and event logging, whereas Hadoop uses a variety of authentication and access control methods. Although Hadoop is generally more secure, Spark can be integrated with Hadoop to raise its security level.

- Machine learning: Spark is the stronger platform for ML because it includes MLlib, which performs iterative ML computations in memory. MLlib also provides tools for classification, regression, pipeline construction, evaluation, persistence, and more.
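Iterative ML algorithms benefit from in-memory processing because they scan the same training data on every iteration. A plain-Python batch gradient descent for a one-variable linear regression shows this access pattern. The sketch has these limits:

- `fit_line` is a hypothetical helper, not MLlib's API; MLlib ships its own solvers.
- The learning rate and epoch count are arbitrary choices for this toy data set.

```python
def fit_line(
    points: list[tuple[float, float]], lr: float = 0.05, epochs: int = 2000
) -> tuple[float, float]:
    """Fit y = w * x + b by batch gradient descent on the mean squared error."""
    if not points:
        raise ValueError("need at least one point")
    w, b = 0.0, 0.0
    n = len(points)
    for _ in range(epochs):
        # Each iteration re-reads the full data set. If `points` had to be loaded
        # from disk every time, I/O would dominate; keeping it in memory avoids that.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]  # y = 2x + 1
    w, b = fit_line(data)
    print(f"w={w:.3f}, b={b:.3f}")
```

The loop makes 2,000 full passes over the data. In a cluster, caching the data set in memory once, instead of reloading it per pass, is what makes this kind of workload fast.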

Misconceptions about Hadoop and Spark

Common erroneous beliefs about Hadoop

- Hadoop is cheap: Although it is open source and easy to set up, keeping the servers running can be expensive. When features such as in-memory computing and network storage are used, big data management can cost up to USD 5,000.

- Hadoop is a database: Although Hadoop is used to store, manage, and analyze distributed data, retrieving data does not involve queries. This makes Hadoop a data warehouse rather than a database.

- Hadoop does not help SMBs: "Big data" is not exclusive to large corporations. Simple Hadoop features such as Excel reporting let smaller businesses take advantage of its capabilities. One or two Hadoop clusters can significantly improve a small business's performance.

- Hadoop is hard to set up: Although Hadoop administration is challenging at the higher levels, several graphical user interfaces (GUIs) make programming for MapReduce simpler.

Common erroneous beliefs about Spark

- Spark is an in-memory technology: Although Spark makes effective use of the least recently used (LRU) algorithm, it is not itself a memory-based technology.

- Spark always runs 100 times faster than Hadoop: According to Apache, Spark can run up to 100 times faster than Hadoop for small workloads. For large workloads, it is typically only up to 3 times faster.

- Spark introduces new data processing technologies: Spark makes efficient use of the LRU algorithm and data processing pipelines. However, these capabilities were previously available in massively parallel processing (MPP) databases. What sets Spark apart from MPP is its open-source orientation.
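The LRU policy mentioned above decides which cached data to drop when memory runs out. The policy itself is easy to sketch with Python's `collections.OrderedDict`. The sketch differs from Spark in these ways:

- `LRUBlockCache` is a hypothetical class that counts capacity in blocks.
- Spark's own memory store accounts for sizes in bytes.
- Depending on the storage level, Spark may spill evicted data to disk instead of dropping it.

```python
from collections import OrderedDict
from collections.abc import Hashable
from typing import Optional


class LRUBlockCache:
    """Fixed-capacity cache that evicts the least recently used block first."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._blocks: OrderedDict = OrderedDict()  # ordered from least to most recently used
        self.evicted: list = []  # IDs of blocks dropped so far, kept for inspection

    def get(self, block_id: Hashable) -> Optional[bytes]:
        if block_id not in self._blocks:
            return None  # cache miss: the caller would recompute or reload the block
        self._blocks.move_to_end(block_id)  # mark as most recently used
        return self._blocks[block_id]

    def put(self, block_id: Hashable, data: bytes) -> None:
        if block_id in self._blocks:
            self._blocks.move_to_end(block_id)
        self._blocks[block_id] = data
        while len(self._blocks) > self.capacity:
            oldest, _ = self._blocks.popitem(last=False)  # remove the LRU entry
            self.evicted.append(oldest)


if __name__ == "__main__":
    cache = LRUBlockCache(capacity=2)
    cache.put("block-a", b"a")
    cache.put("block-b", b"b")
    cache.get("block-a")  # touching block-a makes block-b the least recently used
    cache.put("block-c", b"c")
    print(cache.evicted)  # ['block-b']
```

Reading a block moves it to the "recent" end of the ordering. Frequently reused data therefore tends to stay cached, while data that has not been touched for a while is evicted first.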

Hadoop and Spark use cases

The following examples best demonstrate the overall usefulness of Hadoop versus Spark based on the comparative analysis and factual data presented above.

Hadoop use cases

Hadoop works well in situations including the following:

- Processing big data sets in environments where the data size exceeds available memory

- Running batch jobs that rely on disk read and write operations

- Building data analysis infrastructure on a limited budget

- Completing jobs that are not time-sensitive

- Analyzing historical and archived data

Spark use cases

Spark works well in situations including the following:

- Handling chains of parallel operations with iterative algorithms

- Achieving quick results through in-memory computation

- Analyzing live data streams in real time

- Modeling data with graph-parallel processing

- Machine learning (ML) applications

Hadoop, Spark and IBM

IBM provides a variety of tools to assist you in maximizing your big data management endeavors and accomplishing your overall business objectives while utilizing the advantages of Hadoop and Spark:

- IBM Spectrum Conductor is a multi-tenant platform that deploys and manages Spark alongside other application frameworks on a common, shared cluster of resources. It provides workload management, monitoring, alerting, reporting, and diagnostics. It can also run multiple current and different versions of Spark and other frameworks concurrently.

- IBM Db2 Big SQL is a hybrid SQL-on-Hadoop engine that provides a single database connection. It enables advanced, secure queries across big data sources such as Hadoop HDFS and WebHDFS, relational databases (RDBMS), NoSQL databases, and object stores. Users benefit from low latency, high performance, data security, SQL compatibility, and federation capabilities for ad hoc and complex queries.

- IBM Big Replicate unifies Hadoop clusters running on Cloudera Data Hub, Hortonworks Data Platform, IBM, Amazon S3 and EMR, Microsoft Azure, OpenStack Swift, and Google Cloud Storage. Big Replicate provides a single virtual namespace across clusters and cloud object storage, wherever they are located in the world.